After almost 6 years, 500 blog posts, and over half a million visits, I decided to have a new playground for my (often crazy) experiments. From now on, if you want to follow my ramblings, check ariya.ofilabs.com.

Thursday, July 28, 2011

Tuesday, July 26, 2011

tablets and web performance

Benchmarks, and the results of running them, are attractive because they eliminate the need to digest arbitrarily complex machinery, reducing it to a single meaningful and powerful number. Comparison is thereby made easier, as it becomes a matter of playing the who-has-the-biggest-gun game.

In the area of web performance, every benchmark is more like durian: either you hate it or you love it. No matter how useful (or useless) a benchmark is, there are always folks who defend it and others who despise it. The signal-to-noise ratio changes dramatically once a discussion of some benchmark results gets started.

I still reckon that in the years to come, what makes a great experience while browsing the web depends on the performance of (surprise!) DOM access. Common JavaScript frameworks (like jQuery, Prototype, Ext JS, MooTools, YUI, Dojo, and many others) still form the basis for a lot of rich web sites and interactive web applications out there, at least for the time being and for the near future.

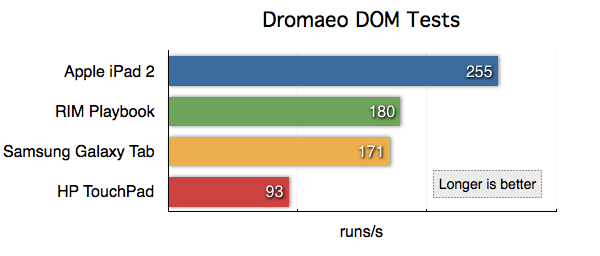

While the SunSpider and V8 benchmarks are geared towards pure JavaScript performance and Kraken is better suited for future heavyweight applications, Dromaeo is a solid candidate for DOM performance analysis. In particular, its set of DOM tests is very valuable because it presents a nice sample of the behavior of framework-based sites. In this context, butter-smooth DOM modification has a bigger impact than just blazing-fast trigonometric computation, at least for the gajillions of web pages out there.

Since more and more people are accessing the web through mobile platforms these days, I decided to test several popular tablets out there and summarize the result in one graph below (updated):

For the detailed comparisons, check out the complete Dromaeo numbers of all tablets (left-to-right: Galaxy Tab, iPad 2, Playbook, TouchPad). If you find that the above result differs from what you measure yourself, shout out. I want to be careful not to propagate any discrepancies or misleading results. As usual, take the above beautiful collection of colored bars with a pinch of salt.

The Samsung Galaxy Tab 10.1 is powered by Android 3.1 (Honeycomb) Build HMJ37, the iPad 2 is using iOS 4.3.3, the RIM Playbook's firmware is 1.0.7.2670, while the HP TouchPad has webOS 3.0. The choice of devices represents a variety of fresh ARM-based tablet operating systems on the market as of this writing.

With Qt coming closer and closer to becoming a good companion of the green robot, I wonder how QtWebKit would compete with those numbers. I think we will find out the answer in a couple of months, maybe even sooner.

Wednesday, July 06, 2011

fluid animation with accelerated composition

Those who work on web-based applications on mobile platforms often recall the advice, "Use translate3d to make sure it's hardware accelerated". This advice seems magical at first, and I seldom find anyone who explains (or wants to explain) the actual machinery behind such a practical tip.

For a while, Safari (and Mobile Safari) was the only WebKit-based browser which supported hardware-accelerated CSS animation. Google Chrome caught up, and QtWebKit-powered browsers (like the one in the Nokia N9) finally supported it as well. Such a situation often gave the wrong impression that Apple kept the hardware-acceleration code for themselves.

The above two are basically the reasons for this blog post.

In case you missed it (before we dive in further), please read what I wrote before about different WebKit ports (to get the idea of the implementation + back-end approach) and the tiled backing store (decoupling web page complexity from smooth UX). The GraphicsContext abstraction will be especially useful in this topic, because animation is tightly related to efficient graphics.

Imagine if you have to move an image (of a unicorn, for example) from one position to another. The pseudo-code for doing it would be:

    for pos = startPosition to endPosition
        draw unicorn at pos

To ensure smooth 60 fps, your inner loop has only about 16 ms to draw that unicorn image. Usually this is a piece of cake because all the CPU does is send the pixels of the unicorn image once to the GPU (in the form of a texture) and then just refer to the texture inside the animation loop. No heavy work is needed on the CPU and GPU sides.

If, however, what you draw is very complicated, e.g. formatted text consisting of different font typefaces and sizes, this gets hairy. The "draw" part can take more than 16 ms and the animation is not butter-smooth anymore. Because your text does not really change during the animation, only the position changes, the usual trick is to cache the text, i.e. draw it onto a buffer and just move the buffer around as needed. Again, the CPU just needs to push the buffer to the GPU once:

    prepare a temporary buffer
    draw the text onto the buffer
    for pos = startPosition to endPosition
        set a new transformation matrix for the buffer

As you can imagine, that's exactly what happens when WebKit performs CSS animation. Instead of drawing your div (or whatever you animate) multiple times in different positions, it prepares a layer and redirects the drawing there. After that, animation is a simple matter of manipulating the layer, e.g. moving it around. The WebKit term for this (useful if you comb the source code) is accelerated compositing.
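The difference between the two loops above can be sketched in a few lines of Python. This is only a toy model, not WebKit code: the `Layer` class, the `draw_text` function, and the call counter are all made up for illustration, and a plain offset tuple stands in for the transformation matrix.

```python
class Layer:
    """A cached buffer: content is drawn once, afterwards only its offset changes."""
    def __init__(self, draw_content):
        self.pixels = draw_content()   # the expensive step, executed once
        self.offset = (0, 0)           # stands in for the transformation matrix


def animate_uncached(draw_content, positions):
    # Redraw the content for every single frame.
    return [(draw_content(), pos) for pos in positions]


def animate_composited(draw_content, positions):
    layer = Layer(draw_content)
    frames = []
    for pos in positions:
        layer.offset = pos             # cheap: just move the layer
        frames.append((layer.pixels, layer.offset))
    return frames


calls = {"draw": 0}                    # counts how often the expensive step runs

def draw_text():
    calls["draw"] += 1
    return "formatted text"


positions = [(x, 0) for x in range(0, 100, 10)]   # 10 animation frames

animate_uncached(draw_text, positions)
uncached_calls = calls["draw"]

calls["draw"] = 0
animate_composited(draw_text, positions)
composited_calls = calls["draw"]

print(uncached_calls, composited_calls)
```

With the cached layer, the expensive drawing happens once regardless of the number of frames, which is the whole point of compositing.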

Side note: Mozilla has the same concept, available since Firefox 4, called Layer.

If you understand immediate vs retained mode rendering, non-composited vs composited is just like that. The idea is to treat the render tree more like a scene graph, a stack of layers with different depth values.

Because composition reduces the computation burden (GPU can handle varying transformation matrix efficiently), the animation is smoother. This is not so noticeable if you have a modern machine. In the following video demo (http://youtu.be/KujWTTRkPkM), I have to use my slightly old Windows laptop to demonstrate the frames/second differences:

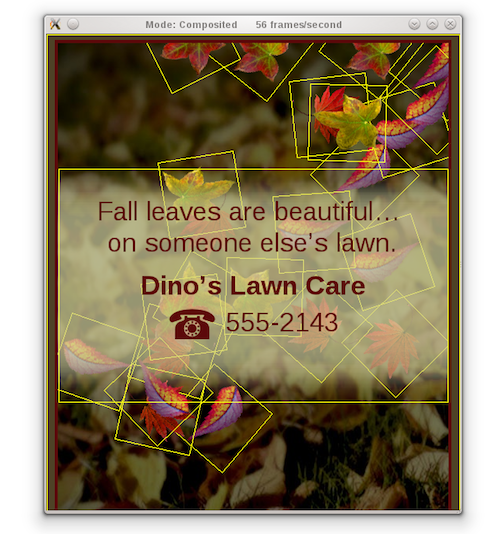

The excellent falling leaves animation is something you have seen before, back when WebKit support for CSS animation was announced.

Accelerated composition does not magically make every WebKit port capable of fluid animation. Analogous to my previous rounded corner example, composition requires support from the underlying platform. On the Mac OS X port of WebKit, composition is mapped into CoreAnimation (part of the QuartzCore framework), the official API for animated user interfaces. The same goes for iOS WebKit. On Chromium, it is hooked into the sandboxed GPU process.

With QtWebKit, composition is achieved via the Graphics View framework (read Noam's explanation for details). The previous video you have seen was created with QtWebKit, running without and with composition, i.e. QGraphicsWebView with a different AcceleratedCompositingEnabled run-time setting. If you want to check out the code and try it yourself, head to the usual X2 repository and look under webkit/composition. Use the spacebar (or a mouse click) to switch between composited and non-composited mode. If there is no significant frame rate improvement, increase NUMBER_OF_LEAVES in leaves.js and rebuild. When compositing is active, press D to draw a thin yellow border around each layer. Since it's all about Graphics View, this debugging is easy to implement. I just inject a custom BorderEffect, based on QGraphicsEffect (which I prototyped back when I was with Nokia).

Thus, there is no hidden secret with respect to Safari's hardware-accelerated CSS support. In fact, Safari is no different from other Mac apps. If you compile WebKit yourself and build an application with it, you would definitely get the animation with hardware acceleration support.

As a bonus, since Mac and iOS WebKit delegate the animation to CoreAnimation (CA), you can use various CA tweaks to debug it. CA_COLOR_OPAQUE=1 will emphasize each layer with a red color overlay (as in the demo). While this applies to any CA-based app (not limited to WebKit or Safari), it's still very useful. Chromium's similar feature is the --show-composited-layer-border command line option.

How does WebKit determine what to composite? Since the goal is to fully take advantage of the GPU, there are a few particular operations which are suitable for such a composition. Among others are transparency (opacity < 1.0) and transformation matrix. Ideally we would just use composition for the entire web page. However, composition implies a higher memory allocation and a quite capable graphics processor. On mobile platforms, these two translate into an additional critical factor: power consumption. Thus, one just needs to draw a line somewhere and stick with it. That's why currently (on iOS) translate3d and scale3d are using composition and their 2-D counterparts are not. Addendum: on desktop WebKit, every transformed element is accelerated, regardless of whether it's 2-D or 3-D.

If you made it this far, here are a few final twists.

First of all, just like the tiled backing store approach I explained before, accelerated composition does not force you to use the graphics processor for everything. For efficiency, your layer (backing store) might be mapped to GPU textures. However, you are not obligated to prepare the layer, i.e. drawing onto it, using the GPU. As an example, you can use a software rasterizer to draw to a buffer which will be mapped to OpenGL texture.

In fact, a further variation of this would be not to use the GPU at all. This may come as a surprise to you but Android 2.2 (Froyo) added composition support (see the commit), albeit doing everything in software (via its Skia graphics engine). The advantage is of course not that great (compared to using OpenGL ES entirely), however the improvement is really obvious. If you have two Android phones (of the same hardware specification), one still running the outdated 2.1 (Eclair) and the other with Froyo, just open the Falling Leaves demo and watch the frame rate difference.

With the non-GPU composition-based CSS animation in Froyo, translate3d and other similar tricks do not speed up anything significantly. In fact, they may haunt you with bugs. For example, placing form elements in a div could wreck the accuracy of touch events, mainly because the hit test procedures forget to take into account that the composited layer has moved. Things which seem to work just fine in Eclair may start behaving weirdly under Froyo and Gingerbread. If that happens to you, check your CSS properties.

Fortunately (or unfortunately, depending on your point of view), Android madness with accelerated composition is getting better with Honeycomb and further upcoming releases. Meanwhile, just take it for granted that your magical translate3d spell has no effect on the green robots.

Last but not least, I'm pretty excited about the lightweight scene graph direction in the upcoming Qt 5. If anything, this will become a better match for QtWebKit accelerated composition compared to the current Graphics View solution. This would totally destroy the myth (or misconception) that only native apps can take advantage of OpenGL (ES). Thus, if you decide to use web technologies via QtWebKit (possibly through the hybrid approach), your investment would be future-attractive!

Sunday, July 03, 2011

birds of paradise

PhantomJS, the headless QtWebKit tool, is now listed as one of the Qt Ambassador show cases. There is also a growing list of projects using PhantomJS (let me know if you want to be listed). In fact, PhantomJS running in several Amazon EC2 instances is used as the primary tool in a web security analysis.

A few days ago, right during the summer solstice, I tagged and released PhantomJS 1.2, codenamed Birds of Paradise. There are some exciting changes there that I will briefly outline as follows (for details, see the Release Notes).

The most important change is the fix to the security model. Your PhantomJS script now does not run in the context of the loaded QWebPage anymore (more precisely, the main frame thereof). Rather, we have a new WebPage object that abstracts (surprise) the web page. This forces a major breakage in the API since there is no way to support the 1.1 style of API with the same code. Again, check the Release Notes to find out how to migrate your script.

The bonus with the above WebPage object abstraction is a bunch of external callbacks we can set up, most notably onLoadStarted and onLoadFinished, useful to trigger some actions upon page loading. Speaking of JavaScript evaluation, dynamic script tag loading is quite a popular trick to asynchronously load external libraries, e.g. those that are part of the Google Libraries API. Rather than writing your own code, there is now an easy-to-use includeJs() function for that specific purpose.

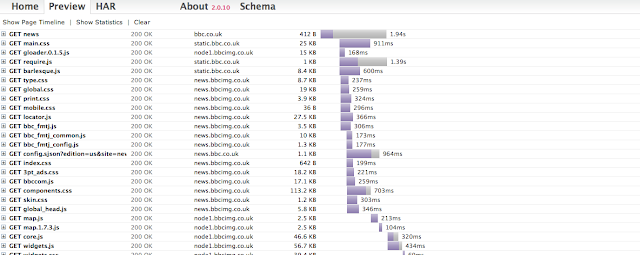

My personal #1 favorite feature is the simple network traffic analysis. I already demonstrated the technique before, i.e. by subclassing QNetworkAccessManager and recording the major network activities (see my previous blog post discussing this in detail). The new PhantomJS example script netsniff.js shows the use of this feature: it logs network requests and responses and dumps them in HTTP Archive (HAR) format. You can then use an online HAR viewer to see the waterfall diagram, or post-process the data the way you like it. For example, here is what the BBC News web site produced:

Since the entire traffic capture can be fully automated, you can have it checked against various rules. For example, you may want to ensure that KDE.org does not get significantly slower (in terms of page loading) every time someone changes the web site design. Perhaps you want to compare the resource loading with gnome.org just to confirm that it loads faster. Gathering the stats of the same site from different geographical locations might also reveal how the web page is perceived by some fans on the other side of the planet.
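Checking a capture against rules could look roughly like the following Python sketch. It only relies on the `log.entries[].time` and `log.entries[].request.url` fields of the HAR format; the function name, the thresholds, and the sample data are invented for illustration and do not come from any real capture.

```python
def check_har(har, max_requests, max_request_ms):
    """Return a list of rule violations found in a HAR capture (a parsed JSON dict)."""
    entries = har["log"]["entries"]
    problems = []
    if len(entries) > max_requests:
        problems.append("too many requests: %d" % len(entries))
    for entry in entries:
        if entry["time"] > max_request_ms:
            problems.append("slow request: %s (%d ms)"
                            % (entry["request"]["url"], entry["time"]))
    return problems


# Made-up sample standing in for json.load() of a real netsniff.js dump.
sample_har = {"log": {"entries": [
    {"time": 120, "request": {"url": "http://example.com/"}},
    {"time": 950, "request": {"url": "http://example.com/big.js"}},
]}}

problems = check_har(sample_har, max_requests=50, max_request_ms=500)
print(problems)
```

Hooked into a nightly job, a non-empty list would be the signal that a redesign made the page heavier.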

The use of mobile devices for consuming information is exploding. It would be fun to see PhantomJS leveraged to get the metrics behind the data traffic. I haven't bothered yet to port and test PhantomJS on a Qt-based Harmattan-powered phone like the shiny new Nokia N9. Of course, if you have a spare one, feel free to FedEx me :)

Thursday, June 30, 2011

quaternion multiplication: two years later

Some time back I wrote (fun fact: it's Google's first hit for faster quaternion multiplication) about my favorite commit, which I made to Qt exactly two years ago:

    git show cbc22908
    commit cbc229081a9df67a577b4bea61ad6aac52d470cb
    Author: Ariya Hidayat
    Date:   Tue Jun 30 11:18:03 2009 +0200

        Faster quaternion multiplications.

        Use the known factorization trick to speed-up quaternion multiplication.
        Now we need only 9 floating-point multiplications, instead of 16 (but
        at the cost of extra additions and subtractions).

Ages ago, during my Ph.D. research, when I worked with a certain hardware platform (hint: it's not a general-purpose CPU), minimizing the number of hardware multipliers needed, with very little impact on the computation speed, made a huge difference. With today's advanced processor architectures armed with vectorized instructions and really smart optimizing compilers, there is often no need to use the factorized version of the multiplication.
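For readers who have not seen the trick, here is a sketch in Python of a plain 16-multiplication product next to one known 9-multiplication factorization. It is an illustration only and is not copied from the commit; quaternions are assumed to be `(w, x, y, z)` tuples and the function names are made up.

```python
def quat_mul_plain(q1, q2):
    """Textbook product: 16 multiplications."""
    w1, x1, y1, z1 = q1
    w2, x2, y2, z2 = q2
    return (w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
            w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
            w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
            w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2)


def quat_mul_factorized(q1, q2):
    """Factorized product: 9 multiplications, more additions and subtractions."""
    w1, x1, y1, z1 = q1
    w2, x2, y2, z2 = q2
    ww = (z1 + x1) * (x2 + y2)
    yy = (w1 - y1) * (w2 + z2)
    zz = (w1 + y1) * (w2 - z2)
    xx = ww + yy + zz
    qq = 0.5 * (xx + (z1 - x1) * (x2 - y2))
    w = qq - ww + (z1 - y1) * (y2 - z2)
    x = qq - xx + (x1 + w1) * (x2 + w2)
    y = qq - yy + (w1 - x1) * (y2 + z2)
    z = qq - zz + (z1 + y1) * (w2 - x2)
    return (w, x, y, z)


print(quat_mul_plain((1, 2, 3, 4), (5, 6, 7, 8)))
print(quat_mul_factorized((1, 2, 3, 4), (5, 6, 7, 8)))
```

Both functions give the same product for these inputs; with general floating-point values the two can differ in the last bits because the operations are ordered differently.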

Side note: if you want to like quaternions, see this simple rotation quiz which can be solved quite easily once you know quaternions.

I tried to apply the same trick to PhiloGL, an excellent WebGL framework from Nicolas. Recently, to my delight, he added quaternion support to the accompanying math library in PhiloGL. I thought this was a nice chance to try the old trick, as I expected that reducing the number of multiplications from 16 to just 9 could give some slight performance advantage.

It turns out that this is not the case, at least based on the benchmark tests running on modern browsers with very capable JavaScript engines. You can try the test yourself at jsperf.com/quaternion-multiplication. I have no idea whether this is due to JSPerf (very unlikely) or simply because the longer construct of the factorized version does not really speed up anything. If anything, it seems that the number of executed instructions matters more than whether addition is much faster than multiplication. And of course, since we're talking about modern CPUs, the difference becomes even more subtle.

With the help of Nicolas, I tried various other tricks to help the JavaScript engine, mainly around different ways to prepare the persistent temporary variables: using normal properties, using Array, using Float32Array (at the cost of precision). Nothing leads to any significant improvement.

Of course, if you have other tricks up your sleeve, I welcome you to try them with the benchmark. Meanwhile, let's hope that someday some JavaScript engine will run the factorized version faster. It's just a much cooler way to multiply quaternions!

Monday, June 27, 2011

progressive rendering via tiled backing store

Imagine you have to create a CAD-grade application, e.g. drawing the entire wireframe of a space shuttle or showing the intricacies of a 9-layer printed circuit board. Basically something that involves heavy work to display the result on the screen. On top of that, the application is still expected to perform smoothly in case the user wants to pan/scroll around and zoom in/out.

The usual known trick to achieve this is by employing a backing store, i.e. off-screen buffer that serves as the target for the drawing operations. The user interface then takes the backing store and displays it to the user. Now panning is a matter of translation and zooming is just scaling. The backing store can be updated asynchronously, thus making the user interaction decoupled from the complexity of the rendering.

Moving to a higher ninja level, the backing store can be tiled. Instead of just one giant snapshot of the rendering output, it is broken down into small tiles, say 128x128 pixels. The nice thing is that each tile can be mapped as a texture in the GPU, e.g. via glTexImage2D. Drawing each textured tile is also a (tasty) piece of cake: GL_QUADS with glBindTexture.
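To make the tiling concrete, here is a small Python sketch that works out which tiles a viewport touches. It assumes integer pixel coordinates in the space of the full rendering and a tile grid that starts at the origin; `visible_tiles` and its parameters are names invented for this illustration.

```python
TILE_SIZE = 128


def visible_tiles(view_x, view_y, view_w, view_h, tile=TILE_SIZE):
    """Return (column, row) indices of all tiles intersecting the viewport."""
    first_col = view_x // tile
    first_row = view_y // tile
    last_col = (view_x + view_w - 1) // tile   # last pixel column still visible
    last_row = (view_y + view_h - 1) // tile
    return [(col, row)
            for row in range(first_row, last_row + 1)
            for col in range(first_col, last_col + 1)]


print(visible_tiles(0, 0, 256, 128))           # viewport aligned with the grid
print(len(visible_tiles(100, 100, 200, 100)))  # viewport straddling tile borders
```

Only the tiles in that list need a texture at any given moment; everything else can stay unrendered until the user pans or zooms there.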

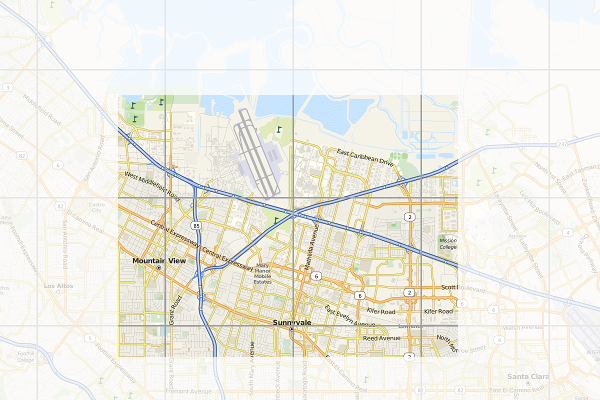

Another common use-case for tiling is online maps. You probably use it every day without realizing it in Google Maps, OpenStreetMap, or other similar services. In this case, the reason to use tiles is mainly to ease the network aspect. Instead of sending one huge image representing the area seen by the user in the viewport, lots of small images are transported and stitched together by the client code in the web browser.

Here is an illustration of the concept. The border of each tile is emphasized. The faded area is what you don't see (outside the viewport). Of course every time you pan and zoom, new fresh tiles are fetched so as to cover the viewport as much as possible.

When I started to use the first generation iPhone years ago, I realized that the browser (or rather, its WebKit implementation) uses a very similar trick. Instead of drawing the web page straight to the screen, it uses a tiled backing store. Zooming (via pinching) becomes really cheap: it's a matter of scaling up and down. Flicking is the same case, since translating textures does not bother any mobile GPU that much.

Every iOS user knows that if you manage to beat the browser and flick fast enough, it tries to catch up and fills the screen as fast as possible, but every now and then you'll see some sort of checkerboard pattern. That is actually the placeholder for tiles which are not ready yet.

Since all the geeks out there likely better understand the technique with a piece of code, I'll not waste more paragraphs and present you this week's X2 example: full-featured implementation of tiled backing store in < 500 lines of Qt and C++. You can get the code from the usual X2 git repository, look under graphics/backingstore. When you compile and launch it, use mouse dragging to pan around and mouse wheel to zoom in/out. For the impatient, see the following 50-second screencast (or watch directly on YouTube):

For this particular trick, what you render actually does not matter much (it could be anything). To simplify the code, I do not use WebKit and instead just focus on SVG rendering, in particular of that famous Tiger head. The code should be pretty self-explanatory, especially for the TextureBuffer class, but here are some random notes for your pleasure.

At the beginning, every tile is invalid (=0). Every time the program needs to draw a tile, it checks first if the tile is valid or not. If not, it substitutes it with the checkerboard pattern instead (also called the default texture) and triggers an asynchronous update process. During the update, the program looks for the most important tile which needs to be updated (usually the one closest to the viewport center). What is a tile update? It's the actual rendering of the SVG, clipped exactly to the rectangular bounding box represented by the tile, into a texture.
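The bookkeeping described above fits in a short Python sketch. This is not the TextureBuffer class from the X2 example, just a toy with invented names: tiles are `(column, row)` tuples, the viewport center is given in the same tile units, and `render` is any function that produces a texture for a tile.

```python
import math

CHECKERBOARD = "checkerboard"       # the default texture


class TileBuffer:
    def __init__(self):
        self.textures = {}          # (col, row) -> texture; a missing key means invalid
        self.pending = set()        # invalid tiles waiting for an update

    def texture_for(self, tile):
        """Texture to draw right now; schedules an update if the tile is invalid."""
        if tile in self.textures:
            return self.textures[tile]
        self.pending.add(tile)
        return CHECKERBOARD

    def update_one(self, center, render):
        """Render the pending tile closest to the viewport center, if any."""
        if not self.pending:
            return None
        tile = min(self.pending,
                   key=lambda t: math.hypot(t[0] - center[0], t[1] - center[1]))
        self.pending.remove(tile)
        self.textures[tile] = render(tile)
        return tile


buf = TileBuffer()
first_draw = [buf.texture_for(t) for t in [(0, 0), (1, 1), (3, 3)]]
updated = buf.update_one(center=(1.2, 1.2), render=lambda t: ("rendered", t))
print(first_draw, updated, buf.texture_for((1, 1)))
```

In a real program `update_one` would be driven by a timer or a worker thread, so the drawing code never waits for the renderer.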

To show the mix-n-match, I actually use Qt's built-in software rasterizer to draw the SVG. That demonstrates that, even though each tile is essentially an OpenGL texture, you are not forced to use OpenGL to prepare the tile itself. This is essentially mixing rasterization done by the CPU with the texture management handled by the GPU.

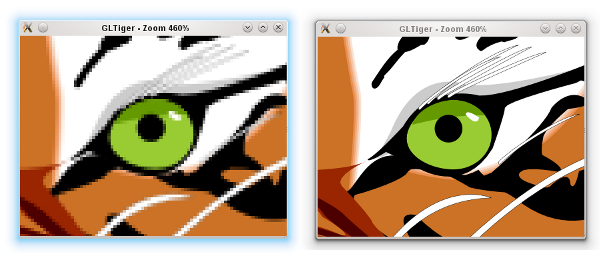

As I mentioned before, panning is a matter of adjusting the translation offset. Zooming is trickier: it involves scaling up (or down) the textures appropriately. At the same time, it also triggers an asynchronous refresh. The refresh function does nothing but reset all the tiles to invalid again, which in turn causes them to be updated one by one. This gives the following effect (illustrated in the screenshot below). If you suddenly zoom in, you will see pixelated rendering (left). After a certain refresh delay, the tile update makes the rendering crisp again (right).

Because we still need to have the outdated tiles scaled up/down (those pixelated ones), we have to keep them around for a while until the refresh process is completed. This is why there is another texture buffer called the secondary background buffer. Rest assured, when none of the tiles in the background buffer is needed anymore, the buffer is flushed.

If you really want to follow the update and refresh, try to uncomment the debug compiler define. Besides showing the individual tiles better, that flag also intentionally slows down both update and refresh so your eyes have more time to trace them.

BTW how would you determine the tile dimension in pixels? Unfortunately this can vary from one piece of hardware to another. Ideally it's not too small, because you'd enjoy the penalty of logical overdraw. If it's too large, you might not be progressive enough. Trial and error, that can be your enlightenment process.
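One rough way to get a feel for the trade-off is to count how many tiles a viewport can touch for a given tile size. The Python sketch below computes an upper bound only; the function name and the 1024x768 viewport are arbitrary picks for illustration, and real numbers depend on the hardware as noted above.

```python
def tiles_needed(view_w, view_h, tile):
    """Upper bound on the number of tiles touched by a viewport at any offset."""
    cols = -(-view_w // tile) + 1   # ceiling division, plus one for misalignment
    rows = -(-view_h // tile) + 1
    return cols * rows


for tile in (32, 128, 512):
    print(tile, tiles_needed(1024, 768, tile))
```

Tiny tiles mean hundreds of textures to track and draw per frame, while huge tiles mean each update step has to render a big chunk before anything new shows up.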

Being an example, this program has a lot of simplifications. First of all, usually you want the tile update to take place in a separate thread, probably updating a few tiles at once. With proper thread affinity, this helps improve the overall perceived smoothness. Also, in case you know upfront that it does not impact the performance that much, using texture filtering (instead of just GL_NEAREST) for the scaling would give a better zooming illusion.

You might also see that I decided not to use the tile cache approach in the texture buffer. This is again done for simplicity. The continuous pruning of unused textures ensures that we don't keep growing the number of textures and kill the GPU. If you really insist on the absolutely minimal amount of overdraw and texture usage, then go for a slightly more complicated cache system.

Since I'm lazy, the example is using OpenGL and quad drawing. If you want to run it on a mobile platform, you have to patch it so that it works with OpenGL ES. In all cases, converting it to use vertex and texture arrays is likely a wise initial step. While you are there, hook the touch events so you can also do the pinch-to-zoom effect.

If you are brave enough, here is another nice final finish (as suggested by Nicolas of InfoVis and PhiloGL fame). When you zoom in, the tiles in the center are prioritized. However, when you zoom out, the tiles near the viewport border should get the first priority, in order to fill the viewport as fast as possible.
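A simple way to express that priority flip is to sort the pending tiles by their distance from the viewport center and reverse the order when zooming out. The Python sketch below uses "farthest from the center" as a stand-in for "nearest to the border", which is an approximation; `update_order` and its arguments are made-up names, with tiles and center given in tile units.

```python
import math


def update_order(tiles, center, zooming_in):
    """Sort tiles by update priority: center first when zooming in, border first when zooming out."""
    def distance(tile):
        return math.hypot(tile[0] - center[0], tile[1] - center[1])
    return sorted(tiles, key=distance, reverse=not zooming_in)


tiles = [(2, 0), (0, 0), (1, 0)]
print(update_order(tiles, center=(0.1, 0.0), zooming_in=True))
print(update_order(tiles, center=(0.1, 0.0), zooming_in=False))
```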

Progressive rendering via a tiled backing store is the easiest way to take advantage of the graphics processor. It's of course just one form, probably the simplest one, of hardware acceleration.

Friday, June 10, 2011

your webkit port is special (just like every other port)

One of the questions I get most often is, "Since browser Foo and browser Bar are using the same WebKit engine, why do I get a different feature set?".

Let's step aside a bit. The Boeing 747, a very popular airliner, uses the Pratt & Whitney JT9D engine. So does the Airbus A310. Do you expect both planes to have the same flight characteristics? Surely not, there are a bazillion other factors which decide how that big piece of metal actually flies. In fact, you would not expect an A310-certified pilot to just jump into a 747 cockpit and land it.

(Aviation fans, please forgive me if the above analogy is an oversimplification).

WebKit, as a web rendering engine, is designed to use a lot of (semi)abstract interfaces. These interfaces obviously require (surprise) some implementation. Examples of such interfaces are the network stack, mouse + key handling, the thread system, disk access, memory management, the graphics pipeline, etc.

What people usually refer to as WebKit is Apple's own flavor of WebKit which runs on Mac OS X (the first and the original WebKit library). As you can guess, the various interfaces are implemented using different native libraries on Mac OS X, mostly centered around CoreFoundation. For example, if you specify a flat colored button with a specific border radius, WebKit knows where and how to draw that button. However, the final responsibility of actually drawing the button (as pixels on the user's monitor) falls to CoreGraphics.

With time, WebKit was "ported" to different platforms, both desktop and mobile. Such a flavor is often called a "WebKit port". For Safari on Windows, Apple themselves also ported WebKit to run on Windows, using the Windows version of its (limited implementation of the) CoreFoundation library.

Besides that, there are many other "ports" as well (see the full list). Via its Chrome browser (and the Chromium sister project), Google has created and continues to maintain its Chromium port. There is also WebKitGtk which is based on Gtk+. Nokia (through Trolltech, which it acquired) maintains the Qt port of WebKit, known as its QtWebKit module.

(This explains why any beginner's scream like "Help! I can't build WebKit on platform FooBar" would likely get an instant reply "Which port are you trying to build?").

With QtWebKit, it's even possible (through a customized QNetworkAccessManager, thanks to Qt's network modularity) to hook in a different network backend. This is for example what is being done for the KDEWebKit module, so that it becomes the Qt port of WebKit which actually uses KDE libraries to access the network.

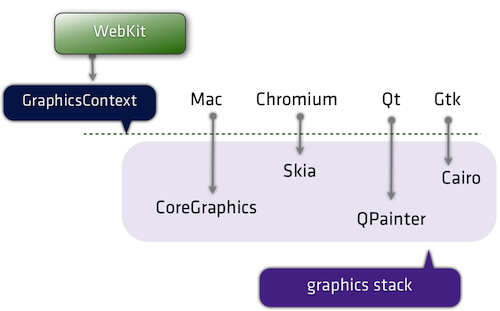

If we come back to the rounded button example, again the real drawing is carried out in the actual graphics library used by the said WebKit port. Here is a simplified diagram that shows the mapping:

GraphicsContext is the interface. All other code inside WebKit will not "speak" directly to e.g. CoreGraphics on Mac. In the above rounded button example, it will call GraphicsContext's fillRoundedRect() function.

There are various implementations of GraphicsContext, depending on the port. For Qt, you can see how it is done in the GraphicsContextQt.cpp file.

Should you know a little bit about graphics, you would realize that there are different methods and algorithms to rasterize a filled rounded rectangle. One approach is to convert it to a filled polygon, another one is to scanline-convert the rounded corner directly. A fully GPU-based system may prefer working with tessellated triangle strips, or even with shaders. The antialiasing level affects the outcome, too.

In short, different graphics stacks with different algorithms may not produce the same result down to the exact pixel colors. It all depends on various factors, including the complexity of the drawing itself.

Now the same concept applies to other interfaces. For example, there is no HTTP stack inside the WebKit code base. All network-aware code calls specific functions to get resources off the server, post some data, etc. However, the actual implementation is in system libraries. Thus, don't bother trying to find SSL code inside WebKit.

This gets us to this question, "If browser X is using WebKit, why does it not have feature Z?". You may be able to deduce the reason. Imagine that the graphics stack which backs GraphicsContext on a certain platform does not implement the fillRoundedRect() function, what would happen? Yes, your rounded button suddenly becomes a square button.
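The interface-plus-port idea, including the square-button outcome, can be mimicked with a few Python classes. None of this is WebKit code: the class names are invented, the methods are Python-style stand-ins for functions like fillRoundedRect(), and the fallback to a plain rectangle is an assumption made purely to illustrate the point.

```python
class GraphicsContext:
    """Port-independent drawing interface (heavily simplified)."""

    def __init__(self):
        self.ops = []   # record of what actually reached the "graphics library"

    def fill_rect(self, rect, color):
        self.ops.append(("rect", rect, color))

    def fill_rounded_rect(self, rect, radius, color):
        # Assumed fallback for an incomplete port: the radius is ignored.
        self.fill_rect(rect, color)


class CompletePortContext(GraphicsContext):
    def fill_rounded_rect(self, rect, radius, color):
        self.ops.append(("rounded_rect", rect, radius, color))


class IncompletePortContext(GraphicsContext):
    pass   # rounded corners not implemented yet


def paint_button(context):
    """Engine-side code: it only ever talks to the interface."""
    context.fill_rounded_rect((0, 0, 80, 24), 6, "gray")
    return context.ops


print(paint_button(CompletePortContext()))
print(paint_button(IncompletePortContext()))
```

The engine-side `paint_button` is identical for both ports; only what ends up on screen differs.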

As a matter of fact, when someone ports WebKit to a new platform, she will need to implement all these interfaces one by one. Until it is complete, of course not everything would work 100% and most likely only the basics are there. That should feel like putting a jet engine into an airframe that can't fly yet.

"Can we have one de-facto graphics stack that powers WebKit so we can always expect pixel-perfect rendering?" Technically yes, but practically no. In fact, while Boeing and Airbus may buy the same engine from Pratt & Whitney, they may not want to have the exact same landing gear. Every one of us wants to be special. A certain system wants to use OpenGL ES, squeeze the best performance out of it and doesn't really care if the selling price goes up. Others want to sacrifice the speed, trim the silicon floor and make the device more affordable. More often, you just have to live with diversity.

And if you want to put aside all the differences, two WebKit ports of the same revision share tons of stuff, especially if they use the same JavaScript engine. They will parse HTML and CSS in the same way, produce the same DOM, yield the same render tree, have the same JavaScript host objects, and so on.

Thus, next time someone shouts "no two WebKits are exactly the same", you know the story behind it.

Monday, June 06, 2011

rectangular gradient

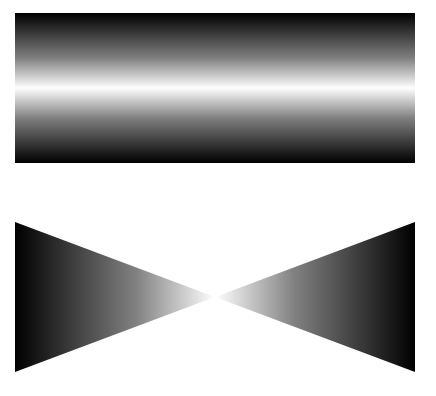

Thorsten Zachmann, of Calligra (and previously KOffice) fame, once asked me how to draw a different kind of gradient: a rectangular one. While Qt itself has built-in support for linear, radial, and conical gradient types, apparently for office apps we may need more than that. In short, the goal is creating the following:

It turns out that this is not so difficult at all, about 50 lines of code. Check it out at the usual X2 repository and find it under graphics/rectgradient.

Basically it boils down to a two-step process, as illustrated below. The first one is easy, just create a linear gradient from the center going north and south. The second one is similar, but now we are going east and west and clip it to two triangles. Once we combine both, we get the rectangular gradient.
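The same two-step idea can be checked numerically without any painting API. The Python sketch below is not the Qt code from the repository; it computes a gradient position per pixel (0 at the center, 1 at the edges), and the function name and grid representation are invented for illustration. Clipping the east/west gradient to the left and right triangles is equivalent to using it wherever its value is at least as large as the north/south one.

```python
def rect_gradient(width, height):
    """Return rows of gradient positions in [0, 1]: 0 at the center, 1 at the edges."""
    cx, cy = (width - 1) / 2, (height - 1) / 2
    half_w = cx or 1.0                     # guard against a 1-pixel-wide grid
    half_h = cy or 1.0
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            ns = abs(y - cy) / half_h      # step 1: gradient going north and south
            ew = abs(x - cx) / half_w      # step 2: gradient going east and west
            # The east/west gradient wins only inside the left and right triangles.
            row.append(ew if ew >= ns else ns)
        rows.append(row)
    return rows


for row in rect_gradient(5, 5):
    print(row)
```

Mapping each position to a color through the usual gradient stops then gives the rectangular look.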

Have fun with the gradient!

Tuesday, May 31, 2011

on the story of browser names

One of the early graphical web browsers that got really popular was Mosaic, developed at the National Center for Supercomputing Applications (NCSA). Some folks from the team, along with SGI founder Jim Clark, decided that it was worth a venture and formed a company, originally called Mosaic Communications and later renamed Netscape Communications.

Netscape's flagship desktop product was a much more advanced web browser than Mosaic. Jamie Zawinski coined the name "Mozilla", as it was supposed to be the Mosaic killer (Mozilla = Mosaic + Godzilla). Later on, the final browser became widely known as Netscape Navigator. For browsing the web, obviously you need a navigator.

Parallel to that, another company called Spyglass licensed the technology from NCSA and produced a web browser, Spyglass Mosaic. It is fun to see the same theme here (probably even slightly coincidental): typical Hollywood movies always portray the ship's navigator using his spyglass for some sort of observation.

Then along came Microsoft. It licensed Spyglass Mosaic, called it Internet Explorer, and distributed it with Windows. The browser war had just started. I mean, of course, the name war. Why would you stop at navigating (and using a spyglass) if you can continue exploring?

On the other side of the planet, KDE slowly emerged as an attractive supplement to the otherwise boring Unix desktop. Internet technologies became the centerpiece of the early versions of KDE, so its developers grew a set of applications: an e-mail program, a newsgroup reader, an IRC client, and (surprise) a web browser. There was no free-software-friendly, modern, and capable web rendering engine back then, so a bunch of brave young hackers started the adventure of (re)writing one under the name KHTML (which was itself a replacement for the original attempt, khtmlw).

From this KDE camp, the ultimate web browser (which could actually serve other tasks as well, e.g. file manager and document viewer) became popular as Konqueror (indeed, those were the days when KDE stuff was named K-this or K-that). History showed that the Age of Discovery was not about navigation and exploration only. After all, who would not want to repeat the glory of "I came, I saw, I conquered"?

When Apple decided that it must give the best browsing experience for Mac (and could not just rely on Microsoft for its Internet Explorer), they took KHTML, ported it to Mac, improved it, and later released it as an open-source project called WebKit. Apple's proprietary web browser, which is powered by WebKit (till today), was announced by Steve Jobs as Safari. Already conquered a land? Might as well enjoy it with a little bit of safari and collect exotic pictures. Shall we?

Just like in your favorite comic books, the world is, however, a multiverse. Netscape lost the browser war, Mozilla became an open-source project, and its Firefox browser (formerly Firebird, and before that Phoenix) remains the icon of freedom, independence, and community. Opera, originally a Telenor research project that came all the way from Norway, has loyal followers and remains dominant in the embedded space. Google even joined the fun and launched the WebKit-based Chrome (and Chromium). All three are excellent web browsers; they just don't have names which fit the story of navigation, exploration, and so on.

In closing, here is a side twist. In its early WebKit days, what did Apple engineers name the code branch of their port of Konqueror's KHTML? Alexander.

Sunday, May 29, 2011

meego conf 2011 impressions

It's a mixed feeling. Personally it was (as always) fun to meet my former coworkers from Qt, Nokia, and other KDE folks, to catch up and exchange tech gossip. I am also excited to get to know the MeeGo team from the Intel side. The conference itself was professionally organized. The Hacker Lounge idea is perfect: a basement to hang out in 24 hours a day, with free cold drinks, lots of games (from foosball to ping-pong), superfast and reliable WiFi, and of course a bunch of comfy couches. The Hyatt Regency itself proved to be a really, really nice venue for such a developer conference.

The three-day program was packed with tons of sessions, anything from Qt 5, Wayland, Scene Graph, Media and IVI, and various other BoFs. Lots of exciting new technologies coming to the next version of MeeGo! Oh BTW, the releases will be every 6 months, expect MeeGo 1.3 in October 2011, along with its experimental Wayland support.

From the device-give-away perspective, Intel threw a lot of ExoPC tablets (flashed with MeeGo 1.2 preview), as part of its AppUp program. I got one for a while, it's really easy to port your existing Qt apps using its SDK (but that's a separate blog post).

But that's about it. LG was supposed to showcase its MeeGo-based LG GW990 (based on Intel Moorestown). There was even a rumor that LG also had a tablet product, not just a smartphone. And of course Nokia has its own N9 phone in the pipeline. None of this happened.

When I remember back at Maemo Summit 2009 in Amsterdam, there was an accelerated momentum just because Nokia gave N900 to everyone. It was a top-of-the-line phone at that time, I still even use it for various demos. Back then I was still with Nokia and after all these years, it's not funny to see that everyone is still using it. It's fine and dandy to have millions of cars using MeeGo for its infotainment, smart TVs based on MeeGo, and so on. A refresh in this smartphone party however would have made a much more dramatic impact with respect to the momentum in the development community.

Seems I still need to wait until I can use a MeeGo phone as my primary phone. Meanwhile, I'll stick with ExoPC tablet to learn various bits of MeeGo. And hopefully nothing would exhaust my patience.

Sunday, May 22, 2011

meego conf 2011

It's MeeGo time! 2011 conference will be held in Hyatt Regency, San Francisco.

The complete program has been published. For topics related to Qt (among others), check what Thiago listed. In particular, yours truly will of course be there, talking about Hybrid Apps (Native + Web) using WebKit.

If you will be around, see you there!

Thursday, April 28, 2011

tango papa echo, charlie golf kilo

Currently stranded at TPE. A few minutes before leaving SFO I managed to tag the 1.1.0 release of PhantomJS (lesson learned: never attempt a release a few hours before boarding). Thanks to Ivan and Alessandro, a few source tarballs and binaries are now ready.

It's been hectic days. 2011 WebKit Contributors Meeting was just over, it was fantastic and I got to meet and talk to a lot of WebKit rockstars. Parallel to that, my fellow brave Sencha lads finally unleashed The Quattro!

Next stop, also the final destination: CGK. Bracing for the impact of reverse culture shock...

Saturday, April 23, 2011

camp kde 2011

Camp KDE 2011 was a fabulous experience. First of all, there is definitely something with me and Camp KDE. The previous one was in San Diego and at that time I was there working for Qualcomm. This year it is San Francisco, right after I moved to the Bay Area.

Videos of the sessions have been published. I contributed two talks there, one is about the (usual) graphics stuff with Qt (video, slides). The other one is a series of demos of web technologies (video, slides).

There are some interview videos as well. If you watch Wade and me, you can spot a little guy there. Pay attention to his shirt :)

The venue can't be better, it's in the center of Japantown. Even better, the weekend after is the Cherry Blossom Festival.

Since I don't live in a big city anymore, it's also fascinating to be able to spot a cozy cafe right between all the big buildings. Right in Japantown, we found YakiniQ which serves a must-try sweet potato latte!

All in all, it was a blast for me. Besides catching up with the people I had met before, I finally got to meet folks I only knew through our online interaction.

Kudos to the Camp KDE organizers! See you in 2012.

Saturday, March 19, 2011

camp kde in 2 weeks

My first Camp KDE experience was when it was held in San Diego, which was also interesting since I had just moved there at that time.

Just like the planets aligning themselves, apparently my move to the Bay Area coincides with this year's Camp KDE in San Francisco. This time, it is co-located with the Linux Foundation Collaboration Summit. For more info, just check the official site: camp.kde.org.

The schedule for the tracks has been finalized, there will be various talks around KDE on Mobile, PIM, and of course Qt. I myself will have two presentations: Introduction to QtWebKit and Efficient Graphics with Qt: Beautiful and Blazing Fast.

See you there!

Saturday, March 12, 2011

SenchaCon 2010

At the last successful, sold-out Sencha Conference 2010, I did two introductory talks about JavaScript and WebKit, mainly targeted for web application developers. Since a few weeks ago, the videos for these talks have been available for you to watch.

JavaScript Engines: Under the Hood is a 10,000-foot overview of how a typical JavaScript engine works. Watch it below or at vimeo.com/18783283, with the accompanying slides at http://slidesha.re/gGx9aA.

The other talk, Compiling and Optimizing Your Own Browser with WebKit (vimeo.com/18780399 for the video, http://slidesha.re/fPSvXX for the slide deck), mostly shows a few tricks you can leverage to understand how your web applications work. For example, by using QtWebKit and capturing all the drawing commands with their corresponding timestamps, it is very easy to produce a slow-motion rendering of your web page. As I showed in the talk, it is even possible to go back in time, i.e. to render your web page backwards.
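
To give a rough feel for the record-and-replay idea, here is a small JavaScript sketch. It is not the QtWebKit code from the talk: the `record` and `replaySchedule` helpers, the fake painter, and the fake clock are all made up for illustration. The sketch only logs calls with a timestamp and then computes when each call would be replayed, either slowed down or in reverse order.

```javascript
// Wrap the listed methods of a target so every call is logged with a timestamp.
function record(target, methodNames, log, now) {
    methodNames.forEach(function (name) {
        var original = target[name];
        target[name] = function () {
            log.push({
                time: now(),
                name: name,
                args: Array.prototype.slice.call(arguments)
            });
            return original.apply(target, arguments);
        };
    });
    return target;
}

// Turn a log into a replay schedule. Delays are stretched by `slowdown`;
// with `backwards` set, the last command is replayed first.
function replaySchedule(log, slowdown, backwards) {
    if (log.length === 0) {
        return [];
    }
    var start = log[0].time,
        end = log[log.length - 1].time,
        entries = backwards ? log.slice().reverse() : log.slice();
    return entries.map(function (entry) {
        var offset = backwards ? end - entry.time : entry.time - start;
        return { delay: offset * slowdown, name: entry.name, args: entry.args };
    });
}

// Hypothetical painter and clock, just to exercise the helpers.
var ticks = [0, 10],
    log = [],
    painter = record({
        fillRect: function () {},
        drawText: function () {}
    }, ['fillRect', 'drawText'], log, function () { return ticks.shift(); });

painter.fillRect(0, 0, 10, 10);
painter.drawText('hi');

var slowMotion = replaySchedule(log, 5, false);
var rewind = replaySchedule(log, 5, true);
```

Feeding each scheduled entry to a real painter through a timer would then give the slow-motion (or backwards) playback.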

Many other videos from SenchaCon 2010 have been published as well. Make sure you check them out.

Enjoy!

Friday, March 11, 2011

PhantomJS meets CoffeeScript

I did something related to CoffeeScript before, namely a simple command-line compiler. For those who are not aware of CoffeeScript, it's basically (from Wikipedia) JavaScript with "syntactic sugar inspired by Ruby and Python".

On the other hand, PhantomJS surprisingly generates more interest than I ever imagined (with over 240 people watching its repository), prompting me not to abandon it too soon :) In fact, I decided to dump my thoughts on its roadmap since more and more people are willing to help.

My secret feature, which is not secret anymore, for the upcoming 1.1 release is to incorporate the CoffeeScript compiler so that PhantomJS scripts can be written in CoffeeScript. Since PhantomJS is intended for various scripting purposes utilizing headless QtWebKit, this is a perfect match.

Gone are the curly braces...

Tuesday, February 22, 2011

vim: fast file navigation with Command-T

Judging from the hits, my blog post on lightning-fast project navigation in vim seems to be still popular. While the Project script is still my favorite these days, especially when dealing with hundreds of files, let me show you here another gem: Command-T script.

Similar to Command-T in TextMate, basically this Command-T script allows quick and incremental search for files. This works very well. The official site for Command-T has several good screencasts which demonstrate how to install and set it up.

Manual installation is fairly simple. In fact, if you use Janus (which I strongly recommend these days for vim lovers), you are already set.

Command-T's documentation is quite extensive, make sure you read it. For the impatient, here are three important tidbits.

(1) Command-T requires vim with Ruby support. One way to find this is:

vim --version | grep '\+ruby'

(2) The default binding is Leader-t. If you use MacVim (or another GUI-based vim on Mac) and you'd like to have it on Command-t (or D-T, in vim's terminology), just insert the following in your .vimrc:

    if has("gui_macvim")
      macmenu &File.New\ Tab key=<nop>
      map <D-t> :CommandT<CR>
    endif

(3) If you like to open the selected file in a new tab, hit Ctrl+T instead of just Enter.

One side note: if you have MacVim but don't have the mvim shortcut, add this to your .profile (or .bash_profile):

alias mvim='/Applications/MacVim.app/Contents/MacOS/Vim -g'

This way, you can launch MacVim from terminal, e.g. mvim code.js.

And in case you still want to use TextMate, why don't you check out TextMate2 instead?

Monday, February 21, 2011

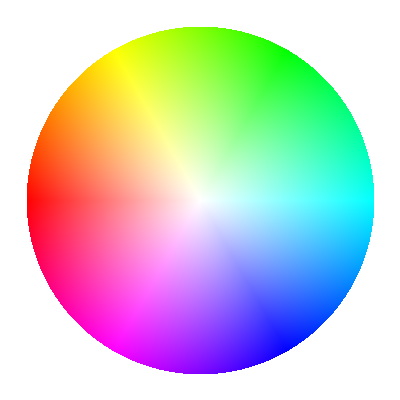

color wheel on Canvas

While I played with the HSV pie and color wheel before, I usually just used the excellent Qt graphics stack to try out various things. These days, I lean towards using web technologies and for that purpose, HTML Canvas suits me just fine. With PhantomJS, I even get the result rendered as a PNG image.

The new example I added to PhantomJS is colorwheel.js which produces the above screenshot. The entire script code is as follows (if some parts look cryptic, read about HSL and HSV color space):

    if (phantom.state.length === 0) {
        phantom.state = 1;
        phantom.viewportSize = { width: 400, height : 400 };
        phantom.content = '<html><body><canvas id="surface">' +
            '</canvas></body></html>';
    } else {
        var el = document.getElementById('surface'),
            context = el.getContext('2d'),
            width = window.innerWidth,
            height = window.innerHeight,
            cx = width / 2,
            cy = height / 2,
            radius = width / 2.3,
            imageData,
            pixels,
            hue, sat, value,
            i = 0, x, y, rx, ry, d,
            f, g, p, u, v, w, rgb;
        el.width = width;
        el.height = height;
        imageData = context.createImageData(width, height);
        pixels = imageData.data;
        for (y = 0; y < height; y = y + 1) {
            for (x = 0; x < width; x = x + 1, i = i + 4) {
                rx = x - cx;
                ry = y - cy;
                d = rx * rx + ry * ry;
                if (d < radius * radius) {
                    hue = 6 * (Math.atan2(ry, rx) + Math.PI) / (2 * Math.PI);
                    sat = Math.sqrt(d) / radius;
                    g = Math.floor(hue);
                    f = hue - g;
                    u = 255 * (1 - sat);
                    v = 255 * (1 - sat * f);
                    w = 255 * (1 - sat * (1 - f));
                    pixels[i] = [255, v, u, u, w, 255, 255][g];
                    pixels[i + 1] = [w, 255, 255, v, u, u, w][g];
                    pixels[i + 2] = [u, u, w, 255, 255, v, u][g];
                    pixels[i + 3] = 255;
                }
            }
        }
        context.putImageData(imageData, 0, 0);
        document.body.style.backgroundColor = 'white';
        document.body.style.margin = '0px';
        phantom.render('colorwheel.png');
        phantom.exit();
    }
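
If the three lookup tables look cryptic, the sketch below pulls the same arithmetic out into a standalone function that runs without PhantomJS or a canvas. The name `wheelColor` is made up for this illustration; it takes the hue scaled to the range 0 to 6 and the saturation in the range 0 to 1, with the value (brightness) fixed at full, and it rounds the channels explicitly since there is no pixel array to do that for us.

```javascript
// HSV to RGB with value fixed at 1, using the same six-sector tables as
// the color wheel script. `hue` is in [0, 6], `sat` is in [0, 1].
function wheelColor(hue, sat) {
    var g = Math.floor(hue),             // which 60-degree sector we are in
        f = hue - g,                     // position inside that sector
        u = 255 * (1 - sat),             // the weakest channel
        v = 255 * (1 - sat * f),         // channel fading out across the sector
        w = 255 * (1 - sat * (1 - f));   // channel fading in across the sector
    // Index 6 only occurs when the angle wraps around; it repeats sector 0.
    return [
        Math.round([255, v, u, u, w, 255, 255][g]),
        Math.round([w, 255, 255, v, u, u, w][g]),
        Math.round([u, u, w, 255, 255, v, u][g])
    ];
}
```

For example, `wheelColor(0, 1)` is pure red, `wheelColor(2, 1)` is pure green, and any hue with a saturation of 0 comes out white, which is why the center of the wheel is white.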

Besides the above example, there are a few other things which you'll get in the upcoming PhantomJS 1.1 release, among others support for Unix shebang, file upload for form submission, disabling/enabling image loading and plugins, as well as support for QUnit integration.

Sunday, January 23, 2011

PhantomJS: minimalistic headless WebKit-based JavaScript-driven tool

PhantomJS is a headless WebKit packaged as a JavaScript-driven tool. It can be used in command-line utilities which require a web stack, or even as the basis for testing rich web applications. It uses WebKit in a headless mode, so you get access to the real native and fast implementation (not a simulated environment) of various standards such as DOM, CSS selector, Canvas, SVG, and many others.

The project page contains a bunch of examples, from easy ones to some more complicated uses. Feel free to contribute more examples!

Let's look at one of the examples, the page rasterizer (yes, it's only 16 lines!):

    if (phantom.state.length === 0) {
        if (phantom.args.length !== 2) {
            console.log('Usage: rasterize.js URL filename');
            phantom.exit();
        } else {
            var address = phantom.args[0];
            phantom.state = 'rasterize';
            phantom.viewportSize = { width: 600, height: 600 };
            phantom.open(address);
        }
    } else {
        var output = phantom.args[1];
        phantom.sleep(200);
        phantom.render(output);
        phantom.exit();
    }

If I want to have the famous PostScript tiger from its SVG source, all I have to do is to run:

phantomjs rasterize.js http://ariya.github.com/svg/tiger.svg tiger.png

But static vector graphic is boring. Replacing the above with

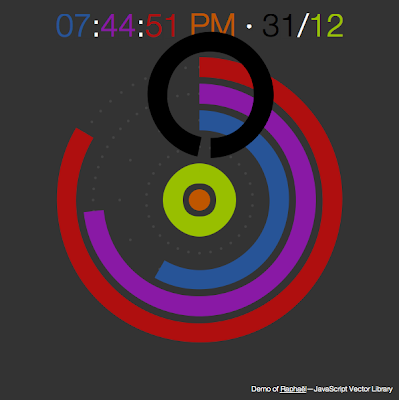

phantomjs rasterize.js http://raphaeljs.com/polar-clock.html clock.png

gives me Polar Clock, one notable example from RaphaelJS.

Should you need to deal with JSONP, process XML, and integrate with YQL, that's all easily done. Again, refer to the various service integration examples. Let me show one example, which is actually my favorite:

    if (phantom.state.length === 0) {
        var origin, dest;
        if (phantom.args.length < 2) {
            console.log('Usage: direction.js origin destination');
            console.log('Example: direction.js "San Diego" "Palo Alto"');
            phantom.exit(1);
        }
        origin = phantom.args[0];
        dest = phantom.args[1];
        phantom.state = origin + ' to ' + dest;
        phantom.open(encodeURI('http://maps.googleapis.com/maps/api/directions/xml?origin='
            + origin + '&destination=' + dest +
            '&units=imperial&mode=driving&sensor=false'));
    } else {
        if (phantom.loadStatus === 'fail') {
            console.log('Unable to access network');
        } else {
            var steps;
            steps = phantom.content.match(/<html_instructions>(.*)<\/html_instructions>/ig);
            if (steps == null) {
                console.log('No data available for ' + phantom.state);
            } else {
                steps.forEach(function (ins) {
                    // The instructions arrive as entity-escaped HTML: decode, then strip the tags.
                    ins = ins.replace(/&lt;/ig, '<').replace(/&gt;/ig, '>');
                    ins = ins.replace(/\<div/ig, '\n<div');
                    ins = ins.replace(/<.*?>/g, '');
                    console.log(ins);
                });
            }
        }
        phantom.exit();
    }

If I run it like the following:

phantomjs direction.js 'Redwood City' 'Sunnyvale'

what I got is the complete driving direction:

    Head east on Broadway toward El Camino Real
    Take the 1st left onto El Camino Real
    Turn right at Whipple Ave
    Slight right to merge onto US-101 S toward San Jose
    Take exit 398B to merge onto CA-85 S toward Santa Cruz/Cupertino
    Take exit 22A to merge onto CA-82 S/E El Camino Real toward Sunnyvale
    Destination will be on the right
    Map data ©2011 Google
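
To try the text extraction without PhantomJS or a network connection, the same regular expressions can be run on a string. This is only a sketch: the `extractSteps` helper is a made-up name, and the sample XML is hand-written on the assumption that each `html_instructions` element sits on one line and carries entity-escaped HTML.

```javascript
// Pull the human-readable steps out of a directions XML string.
function extractSteps(xml) {
    var steps = xml.match(/<html_instructions>(.*)<\/html_instructions>/ig);
    if (steps === null) {
        return [];
    }
    return steps.map(function (ins) {
        // Decode the escaped angle brackets so the markup becomes real tags.
        ins = ins.replace(/&lt;/ig, '<').replace(/&gt;/ig, '>');
        // Put nested <div> notes on their own line.
        ins = ins.replace(/<div/ig, '\n<div');
        // Finally drop every tag, leaving plain text.
        return ins.replace(/<.*?>/g, '');
    });
}

// Hand-written sample, not an actual API response.
var sample = [
    '<step>',
    ' <html_instructions>Head &lt;b&gt;east&lt;/b&gt; on &lt;b&gt;Broadway&lt;/b&gt;</html_instructions>',
    '</step>',
    '<step>',
    ' <html_instructions>Turn &lt;b&gt;right&lt;/b&gt; at &lt;b&gt;Whipple Ave&lt;/b&gt;</html_instructions>',
    '</step>'
].join('\n');

var plainSteps = extractSteps(sample);
```

Because `.` does not match a newline, the greedy `(.*)` stays within a single line, so each step is matched separately.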

Make sure you check out other examples, such as getting weather forecast conditions, finding pizza in New York, looking up approximate location based on IP address, pulling the list of seasonal food, displaying tweets, and many others.

Headless execution of any web content also enables fast unit testing. Obviously, the goal is not to replace comprehensive, cross-browser frameworks such as Selenium or Squish for Web. Rather, it serves as a quick sanity check just before you check in some changes.

Since this can happen automatically and does not need to launch any browser, even better, you can hook the test so that it executes right before a commit and actually prevents the commit if any of the tests fail. It is easily done with git via its hook support. This is something I have written about on the Sencha blog. It demonstrated a pre-commit hook with Jasmine, but technically it can work with any test framework.

I have been working on and off on PhantomJS for the past few years. You may be already familiar with some of its inspiration (also involving headless WebKit): SVG rasterizer, page capture, visual Google, etc. Finally I managed to overcome my laziness, cleaned up the code, and published it for your pleasure. Obviously it's not a surprise if you find out that PhantomJS uses QtWebKit.

I have a few tasks lined up for the next PhantomJS version, 1.1. You are encouraged to file bugs and feature requests in the issue tracker.

Get it while it is hot!

Saturday, January 08, 2011

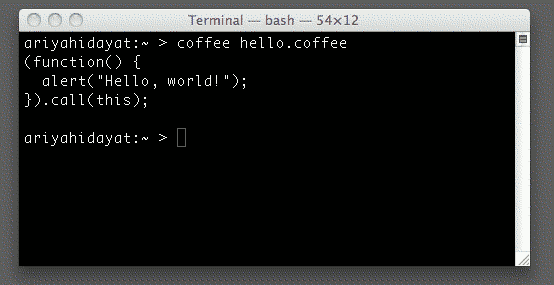

command line CoffeeScript

CoffeeScript seems to be picking up some momentum these days. No doubt, it is very valuable for writing cleaner code.

The command-line choices for running the CoffeeScript compiler right now are either Rhino (jcoffeescript) or NodeJS. While I love NodeJS, it seems like overkill to require the entire NodeJS stack/infrastructure/package manager just to invoke the CoffeeScript compiler.

The solution is to use V8, the powerful JavaScript engine, with a little binding so that it can access file system. This is exactly filejs, something I have shown before, e.g. to invoke JSLint from command line.

Combining filejs and CoffeeScript is terribly easy. Just follow these steps.

Note: filejs does not support Windows yet. Sorry.

First of all, if you have not done it, build filejs. Go to the X2 repository, it is under the javascript/filejs folder. Open the included README.TXT and follow the instructions on how to build V8 and filejs.

After you build it, copy both the filejs executable and coffee.js to somewhere in your PATH. Usually I stash that kind of stuff in ~/bin and ensure that ~/bin is in my PATH.

Now get coffee-script.js (the CoffeeScript to JavaScript compiler) and store it somewhere, e.g. ~/bin again.

Create a new file called coffee, which has the following one-line content:

filejs ~/bin/coffee.js $1

Make that file executable and then save it to ~/bin (again).

Open coffee.js and modify the value of the compiler variable to point to your coffee-script.js. Note: this must use the absolute path name.

Now you can do the following:

coffee hello.coffee

If hello.coffee is your script written in CoffeeScript, the converted JavaScript version will be dumped to the standard output.

Feel free to tweak coffee.js so that it understands and passes various CoffeeScript compiler options!

Fun, isn't it?

Saturday, January 01, 2011

X2 from Ofi Labs: wrap-up 2010

It got started when I needed a new home for my examples. It even has a nice logo.

sensor

accelerometer viewer for Maemo 5 (Nokia N900).

bouncing ball, where the gravity affects the movement of the ball.

box of marbles, where the gravity affects a bunch of colored marbles.

combining accelerometer and network to do inter-device marbles transfer.

motion and orientation for web applications.

web-based version of marble box.

widgets

morphing clock, where the transition between the digital and analog version is a kind of morphing effect.

qpalette viewer so you know which color is which one.

graphics

fast approximation of Gaussian blur to create a blurry drop shadow.

command-line capture tool to save maps from OpenStreetMap, MapQuest and Ovi Maps.

simple tool to list all chunks inside a PNG image.

webkit & javascript

file processing, including using jslint, in command-line using JavaScript.

play Canvas-based game as normal desktop app.

offline, command line beautifier for JavaScript code, utilizing Qt Script.

another variant of the beautifier, this time using V8.

minimalistic editing widget for JavaScript code, with custom syntax highlighting.

white background is boring? just try some color inverted web pages.

detect the closest link to ease following it on a touch device.

Canvas pixel manipulation for plasma effect.

network

simple proxy server for HTTP, in 100 lines.

tracenet: trap all network requests+replies to show them with Speed Tracer.

filterproxy: another variant of the proxy server with added URL filtering feature.